What is feedback and why is it important?

Feedback takes on different meanings in different contexts.

In cybernetics and information theory, feedback is “the process (underline added) by which knowledge acquired from past experiences informs and alters actors’ choices when they encounter similar situations” (Dictionary of the Social Sciences, 2002). We have studied feedback in this frame throughout much of the Masters program, for example when understanding and depicting feedback loops through causal loop diagrams and other systems mapping techniques.

Feedback can also be used as a noun, which is particularly relevant in management sciences and also product development. In this case, feedback is “information or opinions (underline added) about the performance of a product, system, intervention, or employee. Important sources of feedback include clients and customers, users of a product or system, and an employee’s supervisors or fellow workers…Feedback also plays a role in modern management philosophies of continuous improvement, such as total quality management” (Dictionary of Business and Management, 2016).

The focus of this skill is on handling feedback defined as information or opinions (that is, according to the definition used in management sciences and product development articulated above). This skill includes giving, receiving, understanding, interpreting, actioning and reviewing feedback.

Being able to handle feedback is considered a fundamental skill in product development, particularly in Agile environments (Beck et al, 2001). In the context of our cyber-physical system build, being able to undertake feedback processes is one mechanism for improvement of our product. Being able to handle feedback is also a big part of understanding the wider system within which our cyber-physical system is being built, by engaging with different perspectives.

What we, as a team, found quite early on, however, was that we were receiving a myriad of different types of feedback (some conflicting, some helpful, some unclear) and we were uncertain about how to proceed with the feedback.

Some questions emerged:

- Do we really understand the feedback being given? This sense of understanding is not just about the semantics of the feedback, but also about the context within which the feedback was given, the intention of the feedback, the consequences of the feedback if actioned, and the relative weight or importance of the feedback within the ecosystem of feedback received.

- What do we do with the feedback? How do we evaluate the usefulness of the feedback and decide whether we should act on this feedback or not? How do we capture the feedback, recognising that it may become useful or actionable at a later date?

- How do we make decisions about what to do with feedback collectively as a group? We are working in a deliberately multi-disciplinary team for the Cyber-Physical System (CPS) group project. Consequently, our interpretations of the feedback differ, the weight / importance we place on different pieces of feedback can differ and the ways we might address it can differ. How do we balance valuing the different perspectives of each team member with swift decision-making to move forward? What do our decision-making styles say about who has power in the group and how might we mitigate against our own biases?

This portfolio piece is an attempt to capture questions, methods, experiments and learnings from grappling with feedback. It is intended to be useful for future students of the Masters program as well as product managers and others dealing with feedback as they design and build their products.

Context setting: What do our touchpoints with feedback look like throughout the Build course?

There are a variety of ways in which we are interacting with feedback during the course. This reflection will focus on feedback in the context of the CPS group project where we are experiencing feedback in the following ways:

Giving feedback: We are asked to give feedback to other teams and also feedback on our other team members.

Receiving feedback: We are receiving feedback from other teams, from the 3AI Build team and from other stakeholders. This feedback is generally solicited, for example feedback in response to our design brief.

Understanding, interpreting, actioning and reviewing feedback: To date, this has largely been done within our own team processes, with some assistance from the 3AI Build team. It is here where we will focus our learnings and reflections.

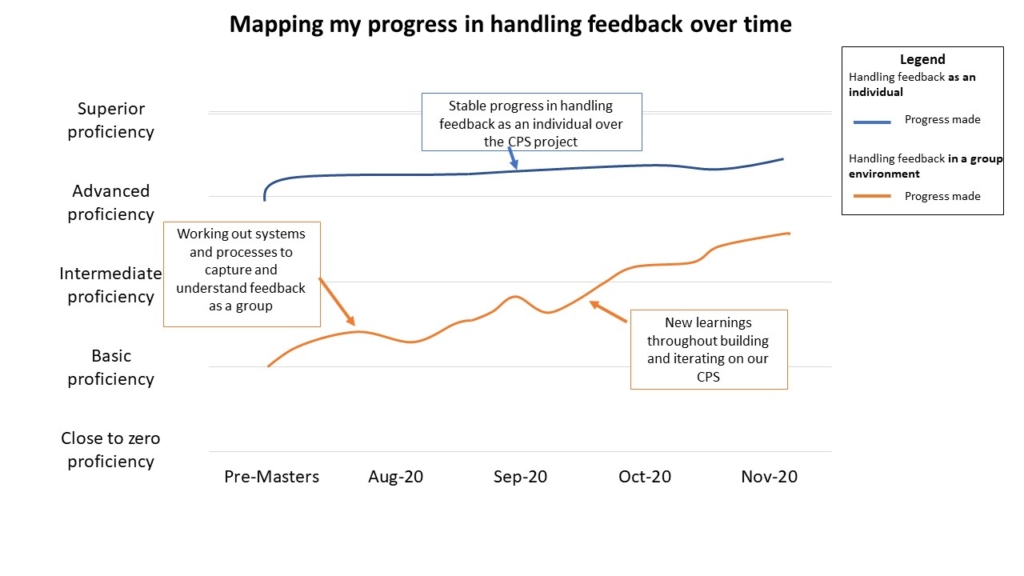

Context setting: Skills proficiency – starting point, over time, and expected

In my previous experience as a strategy consultant I managed clients and a team of people. Feedback was commonplace in this context – soliciting, receiving and acting upon feedback on a report for example, giving feedback on team members’ performance and receiving feedback on my own performance. In pretty much all of these cases, however, feedback was considered, evaluated and actioned in a very individual context. In this context, I would say that my proficiency in handling feedback is in the Advanced – Superior proficiency range. This skill didn’t move a whole lot throughout the course.

In the current context, however, consideration of and decision-making around feedback occurs in a collective context. It’s not just about my own interpretation, evaluation or desired actions; all of these activities are done in a multi-disciplinary team. In this context, I believe my current level of proficiency is basic. Whilst I have had to consider feedback in the context of a team environment before, I have generally done so with a level of authority through my position and experience to steward the feedback process. This case is very different: the feedback received is often in areas far outside of my understanding, there is no ‘boss’ or formal roles of authority and we’re deliberately trying to value a multi-disciplinary approach. All of these factors make handling feedback more foreign and more complex. See Figure 1 for a depiction of my proficiency in handling feedback over the course of the semester.

To continue to improve, I believe I would need to:

- Further investigate software packages that assist with managing feedback at volume in a product manager environment; and

- Continue to build on how to integrate regular review of feedback into team processes and build a team culture of embracing feedback.

Understanding, interpreting, evaluating and actioning feedback: an exploration

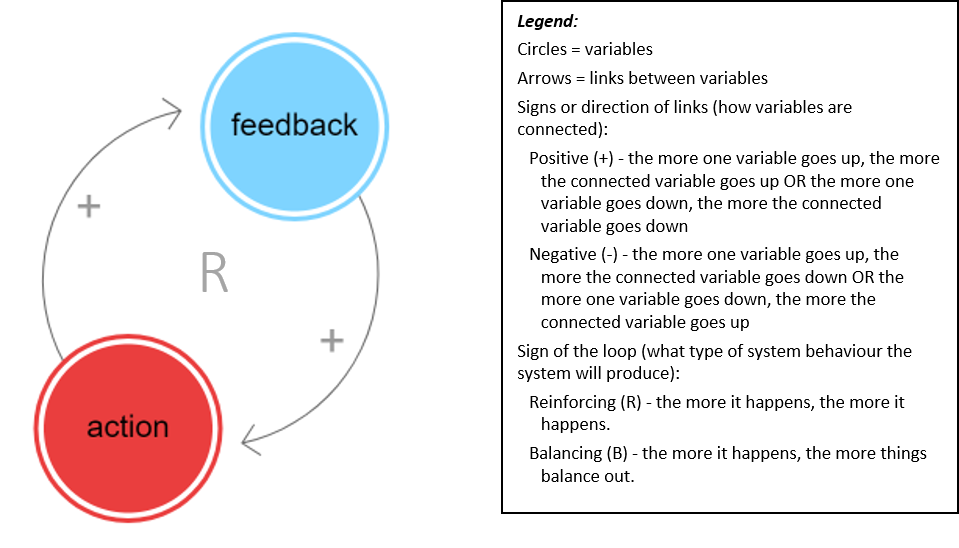

Broadly speaking, feedback is considered a core part of product development and is a precursor to taking some action or performing an iteration on a product. In a very basic frame, it could be said that the more feedback that is received, the more actions are taken to iterate a product, which leads to seeking feedback on the next iteration and so on, in a reinforcing loop (see Figure 2).

There are lots of ways in which the role of feedback, when considered in this simplistic framing of Figure 2, is misrepresented. For example, the diagram doesn’t take into account the limitations of time and resources on taking action on the feedback, or even if the feedback is a good idea in the first place.

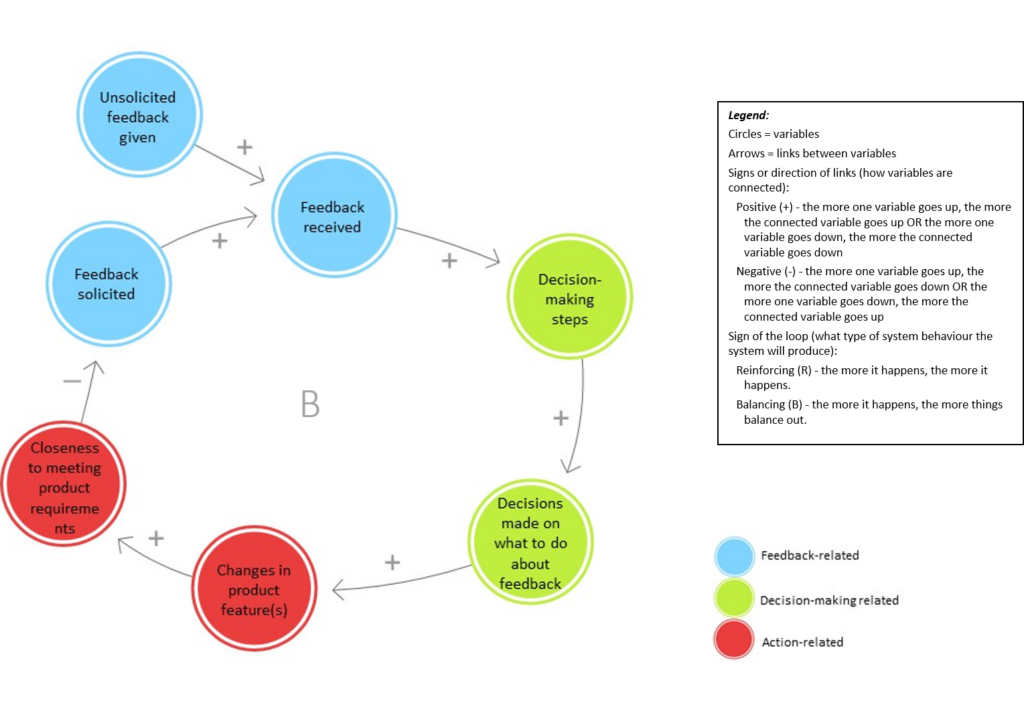

The decision-making steps of understanding, interpreting and evaluating feedback are important mitigating steps before action is taken, as represented in Figure 3. Further, feedback processes are not infinite; they are generally constrained by a requirements specification document, which is connected to time and budget constraints. In an ideal world, the more feedback is integrated into a product, the closer that product is to meeting the desired requirements and the less feedback is needed; that is, a balancing loop is created (see Figure 3).
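The balancing loop can be illustrated with a small numerical sketch. This is only an illustration under stated assumptions, not a model of our project: the "gap" between the product and its requirements, the starting value and the fraction of the gap closed per iteration are all hypothetical quantities chosen to show the shape of the behaviour.

```python
def simulate_balancing_loop(initial_gap, fraction_addressed, iterations):
    """Track a hypothetical 'gap to requirements' over successive iterations.

    Assumption: each round of feedback closes a fixed fraction of the
    remaining gap, so the amount of feedback still needed shrinks over time.
    """
    gap = initial_gap
    history = [gap]
    for _ in range(iterations):
        gap -= fraction_addressed * gap  # feedback acted on reduces the gap
        history.append(gap)
    return history


# Illustrative values only: start with a gap of 100 and close half each round.
print(simulate_balancing_loop(100.0, 0.5, 3))  # [100.0, 50.0, 25.0, 12.5]
```

Each pass closes part of the remaining gap, so the need for further feedback tapers off rather than growing without limit as it would in the reinforcing loop of Figure 2.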

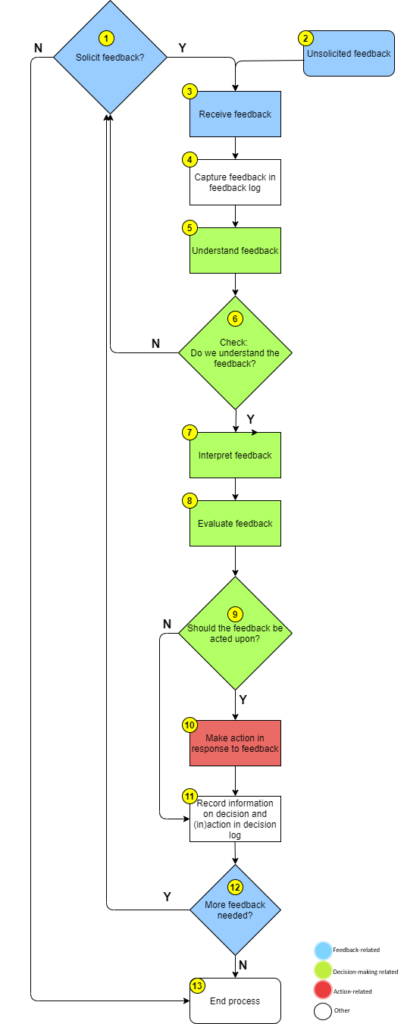

The focus of this reflection is on the decision-making related steps of Figure 3. An approach to working through the decision-making steps of understanding, interpreting and actioning feedback is emerging in the context of our CPS project and is depicted in the decision flow diagram at Figure 4. It is hoped that this begins to articulate a systematic approach that could be repeated and applied in different contexts. Further details are explored below.

There are a few underlying assumptions specific to our context that warrant making explicit. This process emerged in our context, which has some specific characteristics, including:

- We are a team that has discussed and agreed upon the importance of feedback. We have made it a part of our ‘team philosophy’ that we intend to learn from feedback and are motivated to consider it with a sense of importance.

- We are operating in an academic environment, which offers various freedoms: we can focus our attention where we see fit as long as it’s aligned with the marking rubric, we are not beholden to an external funder or their changing requirements or timeframes, and we potentially have much less at stake if things go pear-shaped.

- Our focus is on learning first and foremost, whereas in an industry environment this may come lower on the list of priorities than on-time delivery, quality or customer satisfaction.

Having said that, it is believed that these steps could be applied and adapted to contexts much broader than our own.

1. Deciding on whether to solicit feedback

This is a strategic question for the team. We have solicited feedback when we’ve needed advice on a particular component or idea, when we’ve presented our design brief, and when we’ve done user testing of our prototype. Before soliciting feedback, it’s important to understand the purpose of asking for feedback and decide on whether you’re asking the right stakeholder to achieve that purpose.

2. Unsolicited feedback

Unsolicited feedback can occur when a person has had some contact with the project and is keen to give feedback without being asked. This can happen in an ad-hoc way when discussing project progress for example.

3. Receiving feedback

Feedback can be received in many formats. We have received feedback orally when asking questions of the Build team around various aspects of our project and through the questions that were asked of us as part of the Design Brief presentation panel. We have also received written feedback from another team, from the design brief presentation panel and from the 3AI staff in response to submitted assessments. We have also received feedback through observation of our CPS with users, e.g. on Demo Day. The way in which the feedback is received may influence the way that it is interpreted as well as the way in which it is recorded.

4. Capturing feedback

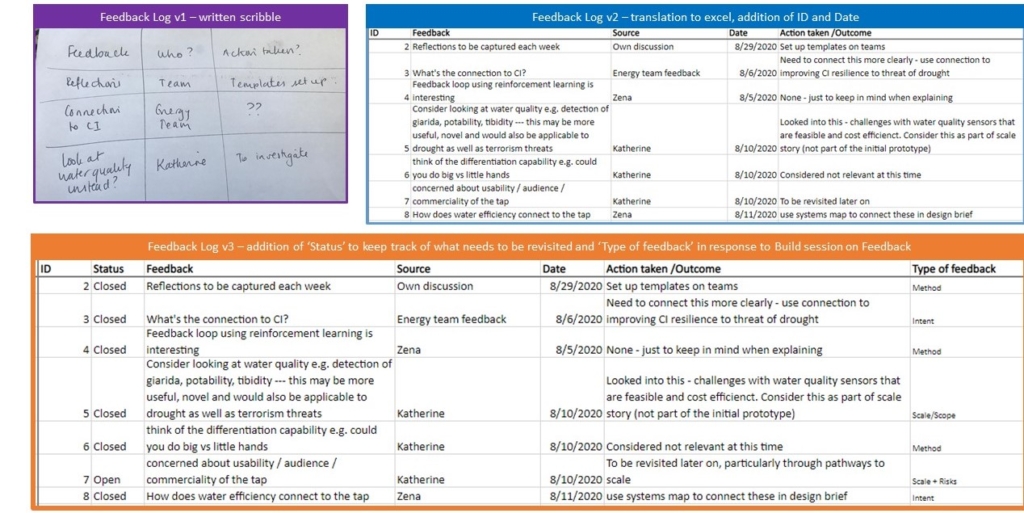

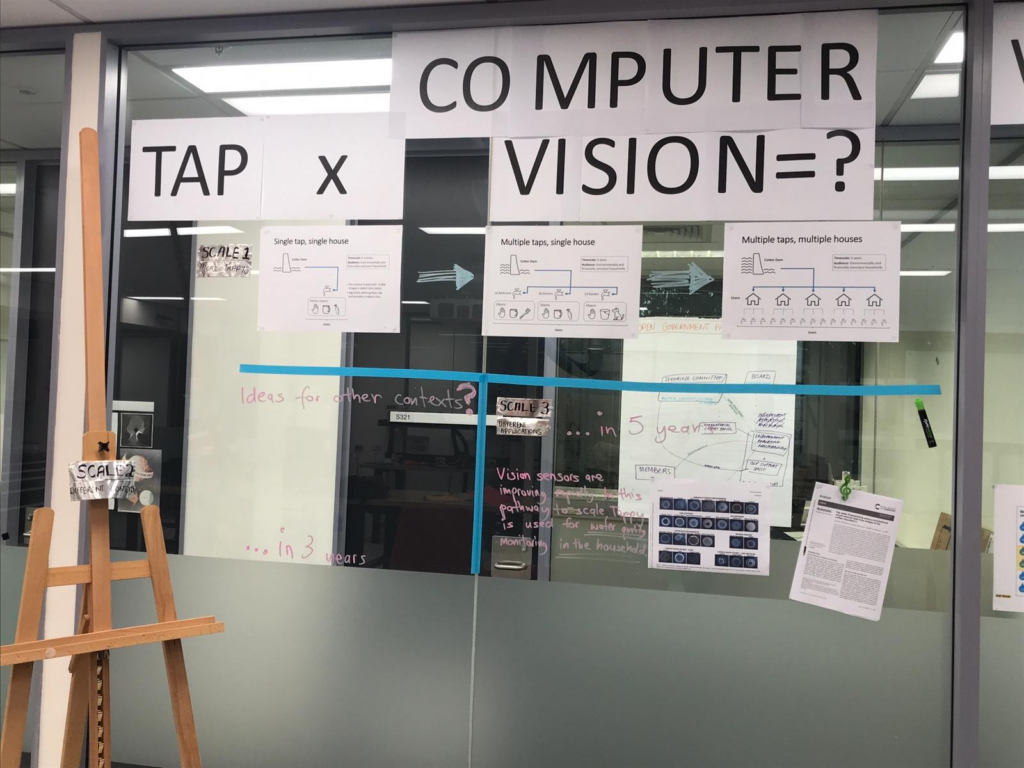

It became clear quite early on that we needed a way of capturing the feedback we were receiving. Initially this took the format of pen and paper. Soon thereafter, we realised we needed a common view and created an Excel sheet with some column headings that lives in our Teams site for collaboration, common viewing and contribution. The labels chosen for the column headings are quite important and are a work in progress. We have already iterated on these headings following a Build workshop focused on feedback, where we saw a feedback framework. As a result of that session, we added a column that classifies feedback according to the following categories – Intent, Method, Scale/Scope and Risks. See Figure 5, which shows the genesis of our way of capturing feedback.

Sherif Mansour (2012) indicates, in his Atlassian Summit talk, that the fields (or column labels) need to be chosen carefully and kept as simple as possible. We revisited the columns throughout the semester and stuck with this format.

You can download a template for your own feedback purposes here:

It is also apparent to us that this system of logging feedback may be better served outside of Excel, for example in git or in JIRA or Confluence. We made use of git minimally throughout the project and would look to use it more in the future.
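As a minimal sketch of what a feedback log outside of Excel might look like, the example below stores entries as plain CSV text, which could be version-controlled in git. Only the four category names come from our log; the other field names (`source`, `channel`, `summary`, `status`) and the helper functions are hypothetical and would need adapting to the actual column headings.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields

# Categories from our feedback log; all other field names are hypothetical.
CATEGORIES = {"Intent", "Method", "Scale/Scope", "Risks"}


@dataclass
class FeedbackEntry:
    source: str           # who gave the feedback
    channel: str          # e.g. oral, written, observation
    summary: str          # short description of the feedback
    category: str         # one of CATEGORIES
    status: str = "open"  # hypothetical workflow state

    def __post_init__(self):
        if self.category not in CATEGORIES:
            raise ValueError(f"unknown category: {self.category!r}")


def write_log(entries, handle):
    """Write entries as CSV with a header row."""
    names = [f.name for f in fields(FeedbackEntry)]
    writer = csv.DictWriter(handle, fieldnames=names)
    writer.writeheader()
    for entry in entries:
        writer.writerow(asdict(entry))


def read_log(handle):
    """Read entries back, re-validating each category."""
    return [FeedbackEntry(**row) for row in csv.DictReader(handle)]


# Illustrative row; the channel and category values are guesses for the example.
entries = [
    FeedbackEntry(
        source="water expert",
        channel="oral",
        summary="Include water quality measurement in the smart tap",
        category="Scale/Scope",
    )
]
buffer = io.StringIO()
write_log(entries, buffer)
buffer.seek(0)
print(read_log(buffer) == entries)  # True
```

Keeping the log as a text file means that each change to an entry shows up as a line-level diff, which is one reason a tool such as git may suit this purpose.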

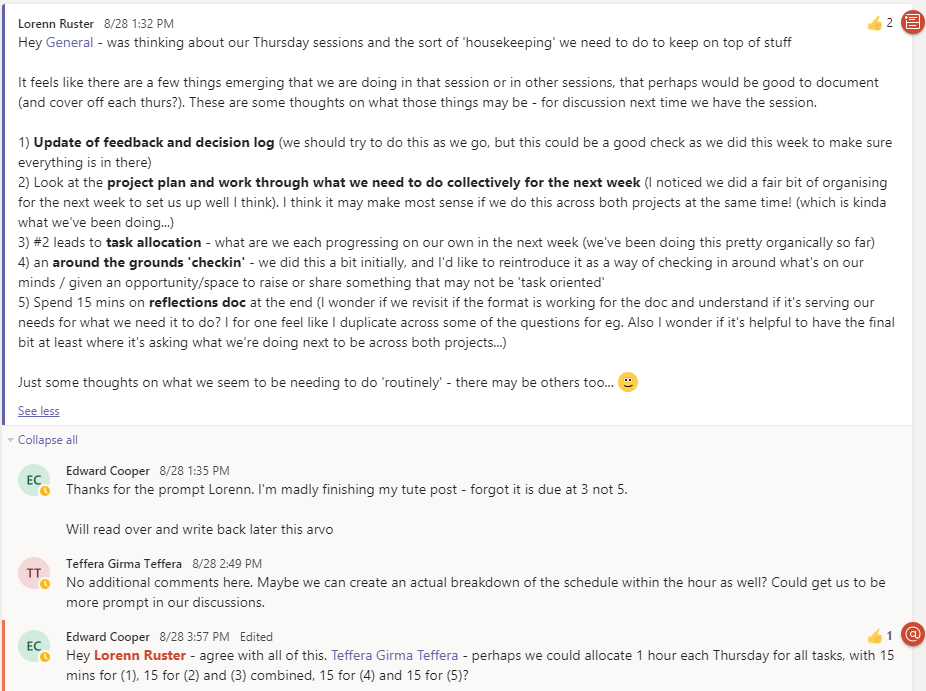

Also of note at this stage is that capturing feedback is not just about the feedback log; the log itself needs to be accompanied by project management rhythms to ensure that the feedback log is updated regularly. In August we had begun to formalise these project management rhythms to ensure that the feedback and decision logs are updated regularly (see Figure 6).

We set ourselves a goal of checking in with feedback and reflections after a stakeholder interview or otherwise once every week. Over time we found that perhaps this was too ambitious and moved the check of the log to once a fortnight.

5. Understanding the feedback

Understanding feedback can take on many forms. In some cases, the feedback is easy to understand and in others, it may be difficult to grapple with. Understanding feedback is not just about the content, but also trying to understand the feedback in a wider systemic context.

Buckingham & Goodall (2019) posit that feedback is more a function of the giver than of the topic discussed, and go on to say that it would be more useful to think of feedback as very personal to the giver rather than as an objective statement.

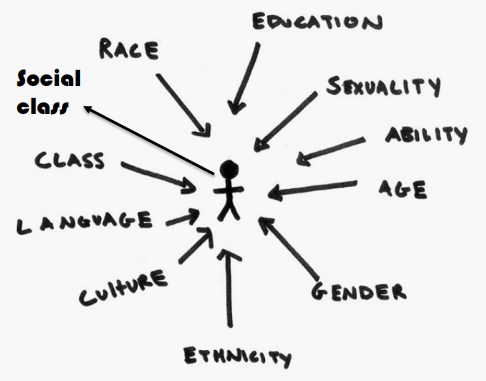

Indeed, feedback is a function of a person’s context and needs to be considered in a much wider systems view. See Figure 7 as an initial exploration of the various elements that influence the feedback given that borrows from social research theories of positionality (Khan, n.d).

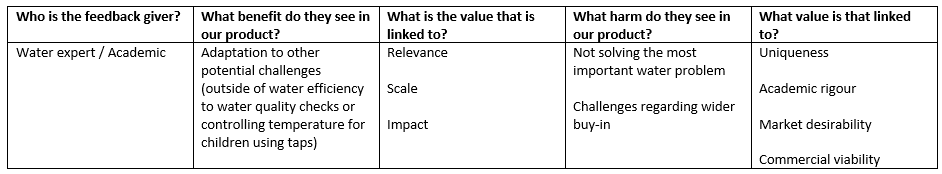

Another method of understanding feedback could be gleaned from Value Sensitive Design which could be used to uncover intentions associated with feedback. An example of how we have used this to work through why particular feedback may have been given in this way is outlined below (see Figure 8).

In the example shown in Figure 8, we were really grappling with understanding the feedback received and whether we should use it to pivot in a substantial way. Talking through the values underpinning the feedback given helped us understand the feedback and work through what was in tension with our own values as a team. In particular, we had previously come to terms with the fact that our prototype may not be the most impactful in its prototype phase, choosing instead to place more value on what was feasible in the timeframes. This led us to consider the feedback in the context of visions for scale, rather than as feedback to pivot our prototype itself (see Figure 9). Similarly, the value of market desirability and commercial viability was much higher for the feedback giver, which makes sense given their context in researching and implementing solutions in the water field. For us as a team, however, we recognised that a core value driving us was less about the product becoming marketable by the end and more about what learning opportunities it provided us. This context helped us to discern how to approach the feedback given.

6. Check if we understand the feedback

If the feedback is unclear, then there is always the option of going back to the feedback giver to further understand or unpack the feedback, or equally of dismissing the feedback. Our experience so far is that feedback givers are quite open to a wider conversation. The rationale for dismissing the feedback at this stage should be recorded in the Feedback Log. Although we haven’t had reason to do this yet, it could be envisioned to occur if, for example, we seek feedback from a stakeholder who is not actually suitable to be giving feedback (e.g. lacks credibility, is not in the appropriate area or has negative intentions).

7. Interpreting the feedback

With a solid understanding, interpreting the nature of the feedback becomes clearer. It should be noted at this stage, however, that different team members will likely have different ways of interpreting feedback, particularly if it is slightly ambiguous in how it was presented. Exploring these different views is important in making an interpretation that serves the project and is also important in demonstrating that every team member’s voice is valued in the process.

In practice, this has looked like making sure that we hear from all team members on a particular piece of feedback and sense-checking interpretations with each other through conversation.

8. Evaluating the feedback

It was at this step that we came unstuck quite quickly. In one particular case, we received feedback that really challenged us to rethink our entire CPS project – would we pivot based on this feedback?

In order to work through the feedback, criteria emerged by which we would evaluate whether the feedback is something we action or not. The beginnings of these criteria could be summarised below:

A) Strategy: Is the feedback aligned with our vision of the CPS?

B) Feasibility: Is the feedback feasible to action? (can we do it in the timeframes, budget and available technologies?)

C) Impact: What are the impacts of integrating the feedback? What are the flow-on decisions that would need to be made? What are some potential unintended consequences?

D) Risks: What are the risks that it poses? Are these within the tolerable threshold for our team?

At this stage we are evaluating feedback through a group conversation that covers off these areas. We have not felt the need to formally ‘grade’ the feedback. Perhaps if the stakes were higher or the decisions more complex, we might consider a grading matrix with these criteria and rate each piece of feedback accordingly and use this information as an audit trail for decision-making.
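If a grading matrix were ever needed, it might look something like the sketch below. This is hypothetical: we have not used such a matrix, and the 1 to 5 scale, the optional weights and the convention that a higher risk score means a more tolerable risk are assumptions made for illustration only.

```python
from dataclasses import dataclass

# The four criteria come from the list above; the scale and weights are assumed.
CRITERIA = ("strategy", "feasibility", "impact", "risk")


@dataclass
class Rating:
    strategy: int     # 1 (not aligned) to 5 (fully aligned with our vision)
    feasibility: int  # 1 (not feasible) to 5 (easily done in time and budget)
    impact: int       # 1 (little benefit) to 5 (large benefit)
    risk: int         # 1 (intolerable risk) to 5 (well within tolerance)


def weighted_score(rating, weights=None):
    """Return the weighted average of the four criteria, on the 1 to 5 scale."""
    weights = weights or {name: 1.0 for name in CRITERIA}
    for name in CRITERIA:
        value = getattr(rating, name)
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be between 1 and 5, got {value}")
    total = sum(weights[name] * getattr(rating, name) for name in CRITERIA)
    return total / sum(weights[name] for name in CRITERIA)


# Illustrative rating for a single piece of feedback (values are made up).
example = Rating(strategy=4, feasibility=2, impact=3, risk=3)
print(weighted_score(example))  # 3.0
```

Recording the individual ratings alongside the final score, rather than the score alone, would preserve the reasoning behind a decision and so serve the audit-trail purpose described above.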

9. Deciding on whether the feedback be acted upon

Once the feedback is looked at through the lens of understanding where it has emerged from and understanding its relative merits and drawbacks, then a decision needs to be made around whether the feedback warrants an action.

The action taken could be to implement the feedback as suggested. However, it could also look like implementing something else that tackles the underlying rationale for the feedback without doing the action suggested. For example, when a water expert suggested that we include water quality measurement in our smart tap, instead of pivoting our whole focus to water quality, we integrated the idea into how the tap may scale (see Figure 9).

Note that at this point, it is completely legitimate to decide that the feedback should not be actioned (or that the appropriate action is inaction).

10. Taking action in response to feedback

The action taken may resemble the advice given in the feedback, or may look different to the advice given but was sparked by the feedback provided.

11. Recording information on the decision and (in)action in the decision log

We quickly realised that a feedback log is only part of the puzzle and we needed to complement that with a space where decisions are recorded. Note that the decision log is not just about decisions made in response to feedback; it may also contain information on decisions made by the team that did not stem from others’ feedback.

See Figure 10 for a snapshot of our decisions log.

It should be noted that, like the feedback log, this is only as useful as it is updated. Accordingly, we discussed how we will keep the feedback and decisions log up to date through a weekly project management rhythm (see Figure 6 above).

We continued with this practice of documenting decisions throughout the whole semester and found that it did assist later on when we needed to justify design decisions, and also to analyse / forecast what might need to happen if a decision is changed or a new piece of information is added. This format, whilst good for listing things, does not do a good job of showing the interconnections between the decisions. More research and understanding may be required to see how we might work towards a more dynamic understanding of the interrelations between decisions made.
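One possible direction, offered only as a sketch and not something we implemented, is to record for each decision which earlier decisions it depends on, and then trace which decisions would need revisiting if one of them changes. The decision identifiers (`D1`, `D2`, ...) and the `depends_on` mapping below are hypothetical.

```python
from collections import deque


def affected_decisions(depends_on, changed):
    """Return every decision that directly or indirectly depends on `changed`.

    `depends_on` maps a decision ID to the IDs of the decisions it relies on.
    """
    # Invert the mapping so we can walk from a decision to its dependents.
    dependents = {}
    for decision, parents in depends_on.items():
        for parent in parents:
            dependents.setdefault(parent, set()).add(decision)

    seen = set()
    queue = deque([changed])
    while queue:  # breadth-first walk over downstream decisions
        current = queue.popleft()
        for child in dependents.get(current, ()):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    seen.discard(changed)  # guard against circular references in the log
    return seen


# Hypothetical log: D2 relies on D1, D3 relies on D2, D4 stands alone.
log = {"D1": [], "D2": ["D1"], "D3": ["D2"], "D4": []}
print(sorted(affected_decisions(log, "D1")))  # ['D2', 'D3']
```

In a spreadsheet, this would amount to adding a "depends on" column to the decision log; the traversal itself could be done by hand for a small log.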

12. More feedback needed?

This is an interesting part of the process – determining when feedback is no longer needed can be tricky, particularly when using Agile processes. It could be imagined that we would no longer solicit feedback when we no longer have time to do anything with that feedback.

13. End process

Once no more feedback is needed, then the process is complete. It should be noted that reviewing feedback to understand if a previous piece of feedback is now relevant and needs to be acted upon, or whether something old is no longer relevant, would be ongoing as part of the project management practices.

It is anticipated that this process will not always be followed. Part of the continued reflection for this skill will be around noticing when things change. Can the process be improved or simplified? Are we missing steps? Under what conditions do we shortcut what is being done with feedback (e.g. when time is more scarce or we’re closer to a deadline)? What are the implications of this on decision-making and concepts such as transparency and explainability?

Risks, Feedback & Decisions Log Template

You can download a template to assist with tracking your risks, feedback and decisions below:

A note on application of cybernetic engineering techniques, tools and resources

The following cybernetic engineering techniques have been applied to this skill:

- systems mapping through causal loop diagrams (Figures 2 & 3)

- process mapping through a decision tree matrix (Figure 4)

- analysis of stakeholders through adapting Value Sensitive Design techniques (Figure 8)

- evidence of experimentation and troubleshooting (Figures 5 & 6)

References

Beck, K. et al (2001). Principles behind the Agile Manifesto. Retrieved from: https://agilemanifesto.org/principles.html

Buckingham, M. & Goodall, A. (2019). The Feedback Fallacy. Harvard Business Review, March-April 2019 Issue. Retrieved from: https://hbr.org/2019/03/the-feedback-fallacy

Dictionary of the Social Sciences (2002). Ed. Calhoun, C. Feedback. Oxford University Press. Retrieved from: https://www-oxfordreference-com.virtual.anu.edu.au/view/10.1093/oi/authority.20110803095813292

Dictionary of Business and Management (2016). Ed. Law, J. (6th edition). Feedback. Oxford University Press. Retrieved from: https://www-oxfordreference-com.virtual.anu.edu.au/view/10.1093/acref/9780199684984.001.0001/acref-9780199684984-e-2468?rskey=p97Lfh&result=1

Khan, C. (n.d). Social Location, Positionality & Unconscious Bias. University of Alberta. Retrieved from: https://cloudfront.ualberta.ca/-/media/gradstudies/professional-development/gtl-program/gtl-week-august-2018/2018-08-28-social-location-and-unconscious-bias-in-the-classroom.pdf

Mansour, S. (2012). Building an effective customer feedback loop. Atlassian Summit. Retrieved from: https://www.atlassian.com/company/events/summit-us/watch-sessions/2012/archives/scrum-kanban/building-an-effective-customer-feedback-loop