It’s Week 1 of the Master of Applied Cybernetics at ANU’s 3A Institute, and we are immersed in a multitude of perspectives that certainly leave more questions than answers.

That’s intentional, and part of our training in getting comfortable with ambiguity, amongst other things.

Recognising that a technology has a history, and connecting it as far back into that history as possible, is something of a sport for the folks at the 3AI and, I dare say, a skill we will be building this year. For example, the invention of double-entry bookkeeping, tape and punch cards eventually leads to the creation of Excel!?! (As an aside: I think this could make a rather marvellous (board?) game, connecting technological advances of the past to today’s technologies, domino-style!) The tendency to tidy up the edges is recognised as a colonialist impulse, and we have begun opening our minds to the ‘messy’. The understanding that a history reveals as much about its teller as about its subject has been at the core of the first week’s readings and discussions in our “Questions Course”.

We unpacked the Proposal for the 1956 Dartmouth Summer Research Project on Artificial Intelligence as an ‘iconic moment’ in setting an AI research agenda that arguably still persists today. As well as understanding the content of this agenda, we looked more closely at its context: who attended the meeting (for a start, a group of white, male researchers) and when (1956, a time when the biggest threat to the US was the Soviet Union). These clues let us read the research agenda in a different light. Teaching machines to use language could be interpreted as driven by the intent to understand Russian. Teaching machines to form abstractions and concepts connects to making sense of ideas like democracy, power and freedom. Teaching machines to solve problems reserved for humans connects to weapons. And so on.

We also discussed how, in the 1940s and 1950s, a cross-disciplinary group of people (biologists, logicians, psychologists, anthropologists, mathematicians and ‘gossips’) convened around the topic of cybernetics, which asked a different set of questions involving the concept of unconsciousness, the nature of humanity, the environment, gender, race, ecosystems and more. Somehow, in the decade leading into the 1956 Dartmouth AI research agenda, the conversation had narrowed to a reductionist view that equated being human with being intelligent (which is reflected in the Dartmouth agenda itself, as it does not explore notions of consciousness, emotions and so on).

All of this leads to some interesting questions:

Why did that reductionism occur?

Why did the Dartmouth 1956 agenda stick?

Whose history is being told?

Whose worldview is being promoted? And why?

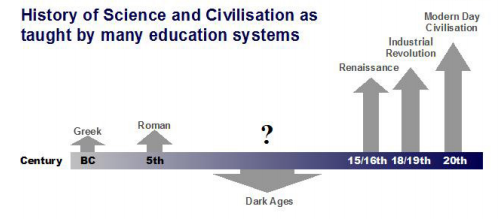

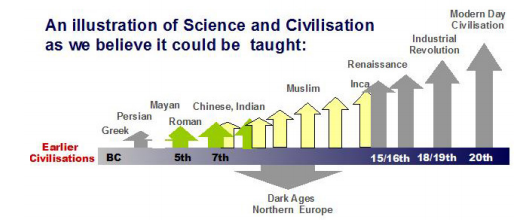

Next up, a small snapshot into the history of periodisation (Green, 1995). It sounds rather dry at first glance; however, I found it fascinating to really question the historical periods as I know them or may have learned them in the classroom. I had never before found myself pondering the historians working in the background, deciding what constitutes a ‘period’ and why. Diving into this question has many implications today, because embedded in these decisions are particular worldviews about humankind continually ‘progressing’ and about what constitutes ‘progress’ across the ages. Ultimately, each decision carries the power to tell a particular narrative and to ignore, mute or misrepresent (consciously or unconsciously) the other narratives that exist concurrently. One of the best illustrations of this came from another reading, which traced the journey of automatic machines in Muslim civilisation and noted that it is generally left out of what is taught in the History of Science and Civilisation in many education systems.

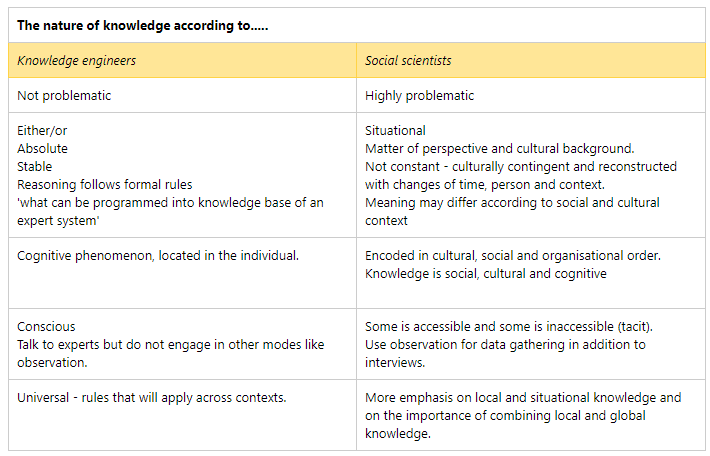

Perhaps the most explicit account of how different knowledges are created and interpreted was Forsythe’s (1993) article comparing knowledge engineers and social scientists and their views on knowledge creation.

I couldn’t help but empathise with the social scientist trying to operate in the world of knowledge engineers, and I compared this scenario to doing consulting infused with Indigenous ways of being, doing and working (the focus of my work immediately before becoming a student this year). Forsythe (1993) suggests some social science training for knowledge engineers as a potential remedy. Though I don’t disagree, in my view a meeting of minds is needed from both sides. Co-creating a way of working together, rather than just adopting parts of the other’s view and trying to fit them into your existing structure, sounds tricky but necessary. I suppose that’s part of 3AI’s goal of building a new branch of engineering.

I’m left with a lot of questions like:

How stable are our worldviews, anyway?

What does that process of worldview transformation look like in practice?

We also read a historical account of Robert Noyce, co-founder of Intel and, many would say, of Silicon Valley as we know it today. We endured a very paternalistic account, written in the 1950s, of the implications of introducing the steel axe into an Indigenous community, and contrasted it with a booklet on Aboriginal Engineering published in 2017. The readings also included a Ray Bradbury short story about the activities of a ‘smart house’, as well as the World Economic Forum’s view of the Fourth Industrial Revolution. Our deeper acquaintance with Prof Genevieve Bell’s talks began with an online keynote and continued later in the week, when she took us through a series of talks she has delivered over the last decade, all in 5 hours!

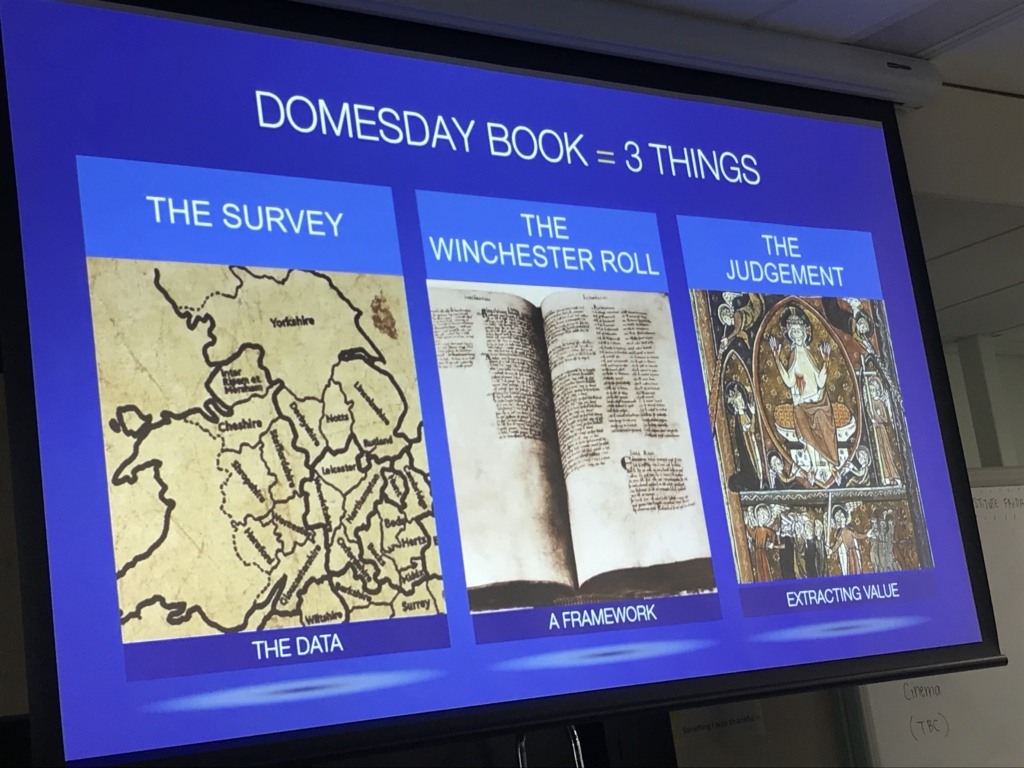

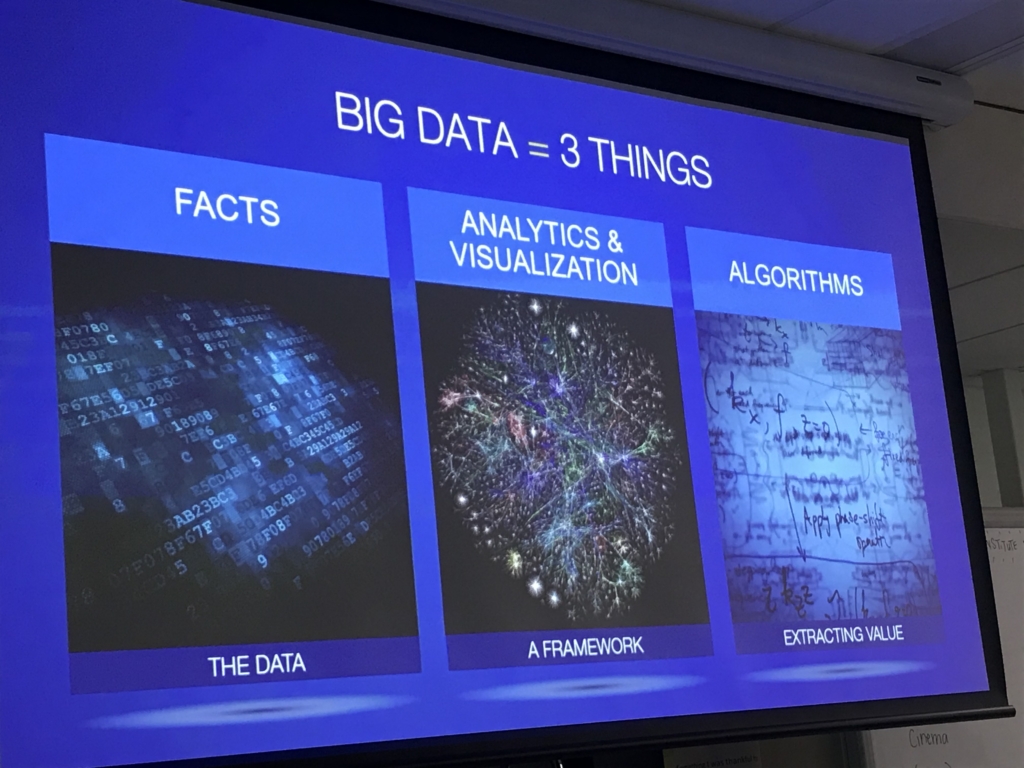

It was like binging on ‘intellectual powerhouse Netflix’, but in real life and with anecdotes of what was happening for Genevieve and the world at the time of each talk. Some highlights: tracing big data back to William the Conqueror and the Domesday Book, uncovering a pre-history of robots, and exploring how to decolonise AI by mapping the mundane, reading against the grain, identifying the subaltern stories and theorising it all into practice. (This is just a flavour!)

And who could forget the short excerpt featuring the Japanese Buddhist robot preacher, and the thought-provoking story of the Nacirema.

And this is just one part of the course! And this is just Week 1 of our own history as 3AI students unfolding!!


1 Comment


I’m fascinated that a view back in time reveals one or many pathways, some intersecting and others divergent, some redundant and others enduring, along which have emerged successive iterations of technologies, some of which transform or morph, and others of which apparently survive as conceived.

What pathways are emerging and how will these facilitate the development and delivery of next generation technologies, for what purpose? Perhaps it’s not so much about the specifics, but more whether there is an ecosystem model that might foresee or foster the emergent.